Conquering the Rapids: A Deep Dive into Stream of Data APIs

In the fast-paced digital world, data flows like a torrent, and harnessing this stream is crucial for real-time insights, adaptive applications, and dynamic user experiences. Enter the realm of Stream of Data APIs: powerful conduits that turn data from a stagnant pond into a fast-moving river of usable information. This guide equips you with the knowledge and tools to navigate the rapids of streaming APIs, so you can unlock their potential and keep pace with the ever-changing data landscape.

Delving Deep: Unveiling the Anatomy of a Stream of Data API

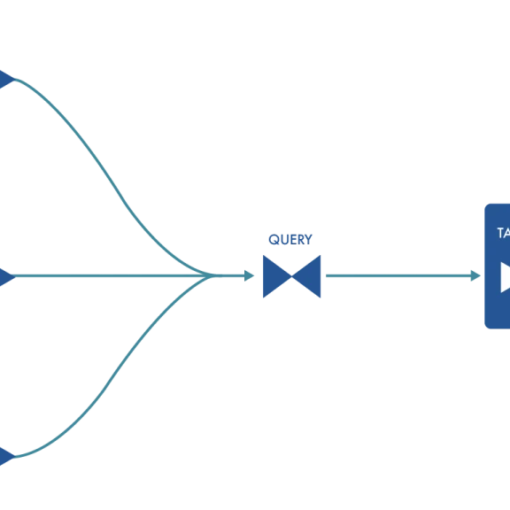

Unlike traditional APIs that serve information on demand, Stream of Data APIs operate in real time, pushing data updates as they occur. Imagine them as live news feeds, broadcasting the latest developments as they unfold, rather than static snapshots taken at specific points in time. This characteristic demands a distinct structure:

- Push Model: Once a client has subscribed, data flows from the server to the client, with updates delivered continuously rather than in response to repeated client requests.

- Event-driven Architecture: Data is delivered as discrete events, triggered by changes in the underlying system or user interactions.

- Scalability and Fault Tolerance: APIs must handle high volumes of data and gracefully recover from network interruptions or system failures.

- Data Formats: Streaming APIs often use lightweight formats such as JSON, or compact binary encodings such as MessagePack, to keep payloads small and latency low.
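
To make the push model and event-driven delivery concrete, here is a minimal in-process sketch in Python. It is not tied to any particular streaming platform: `event_source`, `consume`, `run_demo`, and the `seq`/`type`/`data` event fields are hypothetical names chosen for illustration, and an async generator stands in for a real network connection.

```python
import asyncio
import json
from typing import AsyncIterator


async def event_source(updates: list[dict]) -> AsyncIterator[str]:
    """Hypothetical server side: push each change as one JSON-encoded event."""
    for seq, payload in enumerate(updates, start=1):
        await asyncio.sleep(0)  # stand-in for waiting until something changes
        yield json.dumps({"seq": seq, "type": "update", "data": payload})


async def consume(stream: AsyncIterator[str]) -> list[dict]:
    """Client side: handle events as they arrive instead of polling."""
    received = []
    async for raw in stream:
        received.append(json.loads(raw))
    return received


def run_demo() -> list[dict]:
    # Made-up sample data for the sketch.
    updates = [
        {"symbol": "ACME", "price": 10.0},
        {"symbol": "ACME", "price": 10.5},
    ]
    return asyncio.run(consume(event_source(updates)))
```

The client never asks "is there anything new?"; it simply iterates, and each event arrives when the source produces it. In a real deployment the generator would be replaced by a network transport, but the shape of the consumer loop stays much the same.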

Unleashing the Power: Applications of Stream of Data APIs

These dynamic APIs unlock a wealth of possibilities across diverse domains:

- Financial Trading: Real-time stock quotes, trade execution updates, and market analysis fuel data-driven investment decisions.

- Social Media Monitoring: Track trending topics, brand mentions, and user sentiment in real time, shaping targeted marketing campaigns and responding to customer concerns as they arise.

- IoT Sensor Data: Analyze data streams from connected devices, enabling predictive maintenance, proactive alerts, and optimized resource utilization.

- Fraud Detection: Monitor transactions for suspicious activity in real time, preventing financial losses and safeguarding user security.

- Live Games and Events: Deliver real-time scores, player statistics, and fan reactions, enriching user experiences and fostering interactive engagement.

Navigating the Tributaries: Popular Stream of Data API Platforms

With diverse options available, choosing the right platform is critical:

- Apache Kafka: A robust open-source platform for high-throughput data streaming, widely used for building scalable real-time applications.

- Amazon Kinesis: A cloud-based streaming service from AWS, offering efficient data ingestion, processing, and analysis capabilities.

- Google Cloud Pub/Sub: A highly scalable and reliable serverless pub/sub messaging service for real-time data delivery on the Google Cloud Platform.

- Microsoft Azure Event Hubs: A cloud-based event ingestion service offering reliable event routing and storage capabilities on Azure.

- Apache Pulsar: A distributed streaming platform known for its flexibility, scalability, and low latency, offering strong competition to Apache Kafka.

Taming the Challenges: Mastering Streaming API Development

While powerful, Stream of Data APIs present unique challenges:

- Complexity: Handling real-time data streams requires careful attention to concurrency, error handling, and data resilience.

- Data Volume and Velocity: Scalability and efficient data processing are crucial to avoid bottlenecks and ensure timely insights.

- Security: Protecting sensitive data in motion and safeguarding against denial-of-service attacks demands robust security protocols.

- Monitoring and Observability: Continuously monitoring data pipelines and infrastructure for potential issues is vital for maintaining system health and data integrity.
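
One common way to keep volume and velocity from overwhelming a consumer is backpressure: a bounded buffer that makes a fast producer wait for a slow consumer. The sketch below uses only Python's `asyncio`; the function names, the `None` sentinel, and the buffer size of 8 are assumptions made for illustration, not part of any specific platform.

```python
import asyncio


async def producer(queue: asyncio.Queue, n_events: int) -> None:
    for i in range(n_events):
        await queue.put(i)  # suspends while the queue is full (backpressure)
    await queue.put(None)   # sentinel: no more events


async def consumer(queue: asyncio.Queue) -> tuple[int, int]:
    processed = 0
    peak_depth = 0
    while True:
        peak_depth = max(peak_depth, queue.qsize())
        item = await queue.get()
        if item is None:
            break
        await asyncio.sleep(0)  # stand-in for slow per-event processing
        processed += 1
    return processed, peak_depth


async def pipeline(n_events: int = 100, max_buffer: int = 8) -> tuple[int, int]:
    # The bounded queue caps memory use no matter how fast events arrive.
    queue: asyncio.Queue = asyncio.Queue(maxsize=max_buffer)
    producer_task = asyncio.create_task(producer(queue, n_events))
    result = await consumer(queue)
    await producer_task
    return result
```

Calling `asyncio.run(pipeline())` returns the number of events processed and the deepest the buffer ever got, which never exceeds `max_buffer`. The peak depth is also the kind of figure worth exporting to a monitoring system, since a buffer that is always full suggests the consumer cannot keep up.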

Charting the Course: Best Practices for Successful Streaming API Integration

To navigate the rapids with confidence, embrace these best practices:

- Define Clear Use Cases: Identify your specific needs and choose the API platform that best aligns with your application’s requirements and scaling ambitions.

- Embrace Microservices Architecture: Break down your application into smaller, independent services to simplify development, improve fault tolerance, and facilitate scaling.

- Utilize Streaming Data Frameworks: Leverage existing frameworks like Apache Spark or Apache Flink to efficiently process and analyze real-time data streams.

- Implement Robust Error Handling: Design fault-tolerant systems that gracefully handle network interruptions and data errors, minimizing downtime and data loss.

- Prioritize Security: Implement strong authentication, authorization, and encryption mechanisms to protect sensitive data in transit and at rest.
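
As a rough illustration of robust error handling, the sketch below reconnects after a dropped connection using exponential backoff and resumes from the last offset it handled, so no messages are skipped. Everything here is hypothetical: `connect` is an assumed callable that yields `(offset, message)` pairs starting at a given offset, and the delay values are arbitrary defaults rather than recommendations from any platform.

```python
import time
from typing import Callable, Iterator


def backoff_delays(base: float = 0.5, factor: float = 2.0,
                   cap: float = 30.0) -> Iterator[float]:
    """Yield delays of base, base*factor, ... capped at `cap` seconds."""
    delay = base
    while True:
        yield min(delay, cap)
        delay *= factor


def consume_with_retry(
    connect: Callable[[int], Iterator[tuple[int, str]]],
    handle: Callable[[str], None],
    max_retries: int = 5,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Read (offset, message) pairs, resuming after the last handled offset."""
    next_offset = 0
    retries = 0
    delays = backoff_delays()
    while True:
        try:
            for offset, message in connect(next_offset):
                handle(message)
                next_offset = offset + 1
                # Progress was made, so reset the retry budget and backoff.
                retries = 0
                delays = backoff_delays()
            return next_offset  # stream ended normally
        except ConnectionError:
            retries += 1
            if retries > max_retries:
                raise  # give up and surface the failure to the caller
            sleep(next(delays))
```

Passing `sleep` as a parameter keeps the function easy to test without real waiting. Note that this sketch tracks the offset only in memory; surviving a process restart would require persisting it somewhere durable.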

The River Never Sleeps: The Future of Stream of Data APIs

The streaming API landscape is constantly evolving, with exciting trends emerging:

- Edge Computing: Processing data closer to the source, at the edge of the network, minimizes latency and enables faster real-time decision-making.

- Stream Processing Engines: Real-time analytics and filtering technologies like Apache Kafka Streams or Apache Flink empower immediate action on streaming data.

- Artificial Intelligence and Machine Learning: Integrating AI and ML algorithms with streaming APIs allows for real-time anomaly detection, predictive analysis, and automated responses based on data insights.
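
Real-time anomaly detection does not have to start with a trained model. A simple statistical baseline, sketched below, flags a reading when it sits more than a few standard deviations from the mean of a recent window. The class name, window size, threshold, and warm-up count are all assumptions chosen for illustration; a production system would tune them or swap in a learned model.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flag values far from the mean of a sliding window (z-score test)."""

    def __init__(self, window: int = 20, threshold: float = 3.0,
                 min_samples: int = 5) -> None:
        self.values: deque = deque(maxlen=window)  # oldest values fall off
        self.threshold = threshold
        self.min_samples = min_samples

    def observe(self, value: float) -> bool:
        """Return True if `value` looks anomalous, then add it to the window."""
        is_anomaly = False
        if len(self.values) >= self.min_samples:
            mu = mean(self.values)
            sigma = pstdev(self.values)
            # A zero sigma means the window is constant; skip the test then.
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        self.values.append(value)
        return is_anomaly
```

Each incoming event costs one pass over a small fixed window, so the check can run inline as data arrives. One design caveat: the anomalous value is still added to the window, which temporarily widens the spread and makes the detector less sensitive right after a spike.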

Conclusion: Riding the Data Waves with Confidence

Stream of Data APIs are potent tools, offering a dynamic window into the ever-flowing sea of real-time information. By understanding their architecture, capabilities, and challenges, you can navigate the rapids with confidence, harnessing their power to build adaptive applications, deliver real-time insights, and stay ahead of the ever-changing data curve. Remember, mastering this turbulent landscape requires continuous learning, experimentation, and a spirit of exploration. So, dive into the stream, embrace the challenges, and emerge a data champion, riding the waves of information to create impactful applications that thrive in the dynamic digital world.
